As artificial intelligence becomes increasingly integrated into enterprise operations, organizations face security challenges that extend far beyond traditional cybersecurity concerns. Enterprise AI systems present unique vulnerabilities that can compromise data integrity, expose sensitive information, and create new attack vectors for malicious actors. This guide examines the critical security risks associated with AI implementation in enterprise environments and provides actionable strategies for mitigation.

Introduction: The AI Security Imperative

The rapid adoption of AI technologies across industries has created unprecedented opportunities for innovation and efficiency. However, this digital transformation comes with significant security implications that many organizations are only beginning to understand. Unlike traditional software systems, AI models operate with inherent uncertainties, learn from data that may contain biases or vulnerabilities, and can be manipulated in ways that are difficult to detect and prevent.

Enterprise AI security risks encompass not only the protection of AI systems themselves but also the broader implications of AI-driven decision-making on organizational security posture. As AI systems become more autonomous and influential in business processes, the potential impact of security breaches extends beyond data loss to include operational disruption, regulatory compliance failures, and erosion of stakeholder trust.

Core Categories of Enterprise AI Risks

1. Data Security and Privacy Risks

Data Poisoning Attacks

One of the most insidious threats to AI systems involves the deliberate introduction of malicious data into training datasets. Attackers can subtly corrupt training data to influence model behavior in ways that benefit their objectives while remaining undetected during normal operation. This type of attack is particularly dangerous because it can persist throughout the model’s lifecycle and may only manifest under specific conditions.

Training Data Exposure

AI models can inadvertently memorize and later reveal sensitive information from their training data. This phenomenon, known as training data leakage, poses significant risks when models are trained on proprietary business information, personal customer data, or confidential documents. Attackers can use various techniques to extract this information, potentially violating privacy regulations and exposing competitive advantages.

Inference-Time Data Breaches

During deployment, AI systems continuously process sensitive data, creating multiple opportunities for exposure. Inadequate access controls, insecure API endpoints, and insufficient encryption can allow unauthorized parties to intercept or manipulate data as it flows through AI pipelines.

2. Model Security Vulnerabilities

Adversarial Attacks

AI models are vulnerable to carefully crafted inputs designed to cause misclassification or unexpected behavior. These adversarial examples can be imperceptible to humans but can cause AI systems to make incorrect decisions with high confidence. In enterprise contexts, such attacks could manipulate fraud detection systems, autonomous vehicles, or medical diagnosis tools.
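To make the idea of an adversarial example concrete, the following is a minimal sketch of the fast gradient sign method (FGSM) applied to a toy logistic-regression classifier. The weights, input, and `epsilon` value are hypothetical and chosen only for illustration; real attacks target far larger models, but the mechanism of nudging each feature in the direction that increases the loss is the same.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def linear_score(weights, bias, x) -> float:
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x) -> int:
    """Return 1 if the linear score is positive, else 0."""
    return 1 if linear_score(weights, bias, x) > 0 else 0


def _sign(value: float) -> int:
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift every feature by at most epsilon in the direction that raises the loss."""
    # For logistic loss, the gradient with respect to the input is (p - y) * w.
    error = sigmoid(linear_score(weights, bias, x)) - true_label
    grad = [error * w for w in weights]
    return [xi + epsilon * _sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input (assumptions, not taken from any real system).
WEIGHTS, BIAS = [2.0, -3.0, 1.0], 0.0
x = [0.5, 0.1, 0.2]
x_adv = fgsm_perturb(WEIGHTS, BIAS, x, true_label=1, epsilon=0.2)

print(predict(WEIGHTS, BIAS, x), predict(WEIGHTS, BIAS, x_adv))  # prints: 1 0
```

No feature moves by more than 0.2, yet the predicted class flips. Defenses such as adversarial training work by including perturbed inputs like `x_adv` in the training set.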

Model Inversion and Extraction

Sophisticated attackers can reverse-engineer AI models to learn about their internal structure and training data. Model inversion attacks use a model's outputs to reconstruct characteristics of the data it was trained on. Model extraction attacks allow adversaries to create functionally equivalent models without access to the original training data, potentially undermining competitive advantages and intellectual property protection.

Prompt Injection and Manipulation

Large language models and generative AI systems are particularly susceptible to prompt injection attacks, where malicious inputs are designed to override system instructions or extract sensitive information. These attacks can be especially problematic in customer-facing applications or automated decision-making systems.
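As a rough illustration of one defensive layer, the sketch below screens untrusted input for a few telltale phrases and keeps trusted instructions separate from user text. The pattern list, function names, and the `system`/`user` message layout are assumptions made for this example. Pattern matching of this kind is easy to bypass and should be treated as one signal among several, not as a complete defense.

```python
import re

# Hypothetical phrases for illustration; a real deployment would need a much
# broader, regularly maintained set and additional controls.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"reveal (your|the) (system prompt|instructions)",
    r"you are now",
]


def flag_prompt_injection(user_input: str) -> list[str]:
    """Return every suspicious pattern that matches the untrusted input."""
    return [
        pattern
        for pattern in SUSPICIOUS_PATTERNS
        if re.search(pattern, user_input, re.IGNORECASE)
    ]


def build_messages(system_prompt: str, user_input: str) -> list[dict]:
    """Keep trusted instructions and untrusted input in separate messages
    instead of concatenating them into a single string."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]
```

An application might log or route flagged requests for review, for example when `flag_prompt_injection(text)` returns a non-empty list, while still limiting what the model is permitted to do regardless of the input.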

3. Infrastructure and Deployment Risks

Supply Chain Vulnerabilities

The AI ecosystem relies heavily on third-party components, including pre-trained models, datasets, and development frameworks. Each element in this supply chain represents a potential security risk, as malicious actors could introduce vulnerabilities or backdoors that persist across multiple deployments.
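One basic control against tampered third-party artifacts is to pin a known-good checksum for each downloaded model or dataset and verify it before loading. The sketch below assumes the expected SHA-256 digest was obtained through a trusted channel; the function names are illustrative.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A checksum only shows that the file is the one that was pinned. It does not show that the original artifact was free of backdoors, so it complements vendor assessment and model testing rather than replacing them.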

Cloud and Edge Security Challenges

AI workloads often span multiple environments, from cloud-based training platforms to edge devices for inference. This distributed architecture creates complex security challenges, including securing data in transit, managing access controls across different environments, and ensuring consistent security policies.

Container and Orchestration Risks

Modern AI deployments frequently use containerized applications and orchestration platforms like Kubernetes. While these technologies offer scalability and flexibility, they also introduce new attack vectors and require specialized security expertise to configure properly.

4. Operational and Governance Risks

Lack of AI Governance

Many organizations deploy AI systems without adequate governance frameworks, leading to inconsistent security practices, unclear accountability, and difficulty in managing AI-related risks across the enterprise. Without proper governance, organizations cannot effectively monitor, assess, or respond to AI security threats.

Insufficient Monitoring and Auditability

AI systems often operate as “black boxes,” making it difficult to detect when they have been compromised or are behaving unexpectedly. The lack of interpretability in many AI models complicates security monitoring and incident response efforts.

Regulatory Compliance Challenges

The evolving regulatory landscape around AI creates compliance risks, particularly in heavily regulated industries. Organizations must navigate complex requirements around data protection, algorithmic transparency, and bias prevention while maintaining security standards.

Industry-Specific Risk Profiles

Financial Services

Financial institutions face unique AI security challenges due to the sensitive nature of financial data and the high-stakes environment of financial decision-making. AI systems used for fraud detection, credit scoring, and algorithmic trading are particularly attractive targets for sophisticated attackers.

Healthcare

Healthcare AI systems process highly sensitive patient data and make decisions that can directly impact patient outcomes. Security breaches in healthcare AI can lead to privacy violations, medical errors, and loss of patient trust.

Manufacturing and Industrial IoT

Industrial AI systems control critical infrastructure and manufacturing processes. Security compromises in these systems can lead to production disruptions, safety incidents, and intellectual property theft.

Government and Defense

Government AI systems handle classified information and support national security operations. These systems face advanced persistent threats and require the highest levels of security assurance.

Emerging Threat Landscape

AI-Powered Cyberattacks

Malicious actors are increasingly using AI to enhance their attack capabilities, creating more sophisticated phishing campaigns, automating vulnerability discovery, and developing adaptive malware that can evade traditional security measures.

Deepfakes and Synthetic Media

The proliferation of deepfake technology poses new risks to enterprise security, including impersonation attacks, disinformation campaigns, and social engineering attacks that leverage synthetic audio and video content.

Supply Chain AI Risks

As AI components become more commoditized, the risk of supply chain attacks increases. Malicious actors may target AI development tools, pre-trained models, or cloud-based AI services to gain access to enterprise systems.

Risk Assessment and Management Framework

Risk Identification and Classification

Organizations must develop systematic approaches to identify and classify AI-related security risks. This process should consider the specific use cases, data types, and business impact of each AI system.
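One simple way to make such classification repeatable is a likelihood-by-impact score for each AI system. The sketch below is illustrative only: the 1 to 5 scales, the tier thresholds, and the class name are assumptions, and an organization would calibrate them to its own risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystemRisk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (negligible) to 5 (severe); assumed scale

    def __post_init__(self):
        for field_name in ("likelihood", "impact"):
            if not 1 <= getattr(self, field_name) <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def tier(self) -> str:
        # Thresholds are arbitrary examples, not an industry standard.
        if self.score >= 15:
            return "high"
        if self.score >= 6:
            return "medium"
        return "low"


def rank_by_risk(systems):
    """Order systems from highest to lowest score for review prioritization."""
    return sorted(systems, key=lambda s: s.score, reverse=True)
```

Ranking an inventory of systems with `rank_by_risk` gives a starting point for deciding where threat modeling and testing effort should go first.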

Threat Modeling for AI Systems

Traditional threat modeling approaches must be adapted for AI systems, considering unique attack vectors such as adversarial examples, model extraction, and training data poisoning.

Continuous Risk Monitoring

AI security risks evolve rapidly, requiring continuous monitoring and assessment. Organizations should implement automated monitoring tools and establish processes for regular risk reviews.
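One signal that automated monitoring can track is drift between the score distribution a model produced at validation time and the distribution it produces in production, since a sudden shift can indicate data problems or manipulation. The following minimal sketch computes the Population Stability Index (PSI) in plain Python. The bin count, the smoothing constant, and the alert threshold in the usage note are assumptions for illustration.

```python
import math


def psi(expected, actual, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range fall into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A value near zero means the two samples are distributed similarly. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, although the right threshold depends on the system being monitored.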

Mitigation Strategies and Best Practices

Secure AI Development Lifecycle

Implementing security throughout the AI development lifecycle is crucial for managing risks. This includes secure data collection and preparation, secure model training and validation, and secure deployment and monitoring.

Data Protection and Privacy

Organizations must implement robust data protection measures, including encryption, access controls, and privacy-preserving techniques such as differential privacy and federated learning.
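As an example of a privacy-preserving technique, the sketch below applies the Laplace mechanism from differential privacy to a counting query. It is a minimal illustration under stated assumptions: the function names are hypothetical, and Python's `random` module is not a cryptographically secure noise source, so production systems would normally rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values that satisfy the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one record changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

Smaller values of `epsilon` add more noise and give stronger privacy, while larger values give more accurate answers. For instance, `private_count(ages, lambda a: a >= 65, epsilon=0.5)` would return the number of records with age 65 or above plus noise with scale 2.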

Model Security Controls

Protecting AI models requires specialized security controls, including adversarial training, model watermarking, and secure model serving architectures.

Infrastructure Security

Securing the infrastructure that supports AI systems requires attention to cloud security, container security, and network segmentation.

Incident Response and Recovery

Organizations must develop AI-specific incident response procedures and establish processes for model rollback and recovery in case of security incidents.

Regulatory and Compliance Considerations

Global AI Regulations

The regulatory landscape for AI is rapidly evolving, with new laws and guidelines emerging in jurisdictions around the world. Organizations must stay informed about relevant regulations and ensure compliance.

Industry Standards and Frameworks

Various industry standards and frameworks are being developed to address AI security risks. Organizations should align their practices with relevant standards and participate in industry initiatives.

Audit and Assurance

Regular audits and third-party assessments are essential for validating AI security controls and demonstrating compliance with regulatory requirements.

Technology Solutions and Tools

AI Security Platforms

Specialized AI security platforms are emerging to address the unique challenges of securing AI systems. These platforms provide capabilities for model monitoring, adversarial attack detection, and AI governance.

Security Testing Tools

Organizations need specialized tools for testing AI systems against security threats, including adversarial attack simulators and model extraction detection systems.

Monitoring and Observability

Comprehensive monitoring and observability solutions are crucial for detecting and responding to AI security incidents in real time.

Building an AI Security Program

Organizational Structure

Establishing clear roles and responsibilities for AI security is essential. This may include creating new positions such as AI security officers or expanding existing security teams.

Skills and Training

Building AI security capabilities requires specialized skills and training. Organizations must invest in developing internal expertise or partnering with external specialists.

Vendor Management

Managing AI security risks requires careful evaluation and management of AI vendors and service providers. Organizations should establish clear security requirements and conduct regular assessments.

Future Trends and Considerations

Quantum Computing Impact

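The advent of quantum computing may significantly impact AI security. Sufficiently powerful quantum computers could break widely used public-key cryptographic schemes, which would weaken the protections that currently secure AI data and models and would require new approaches to model protection.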

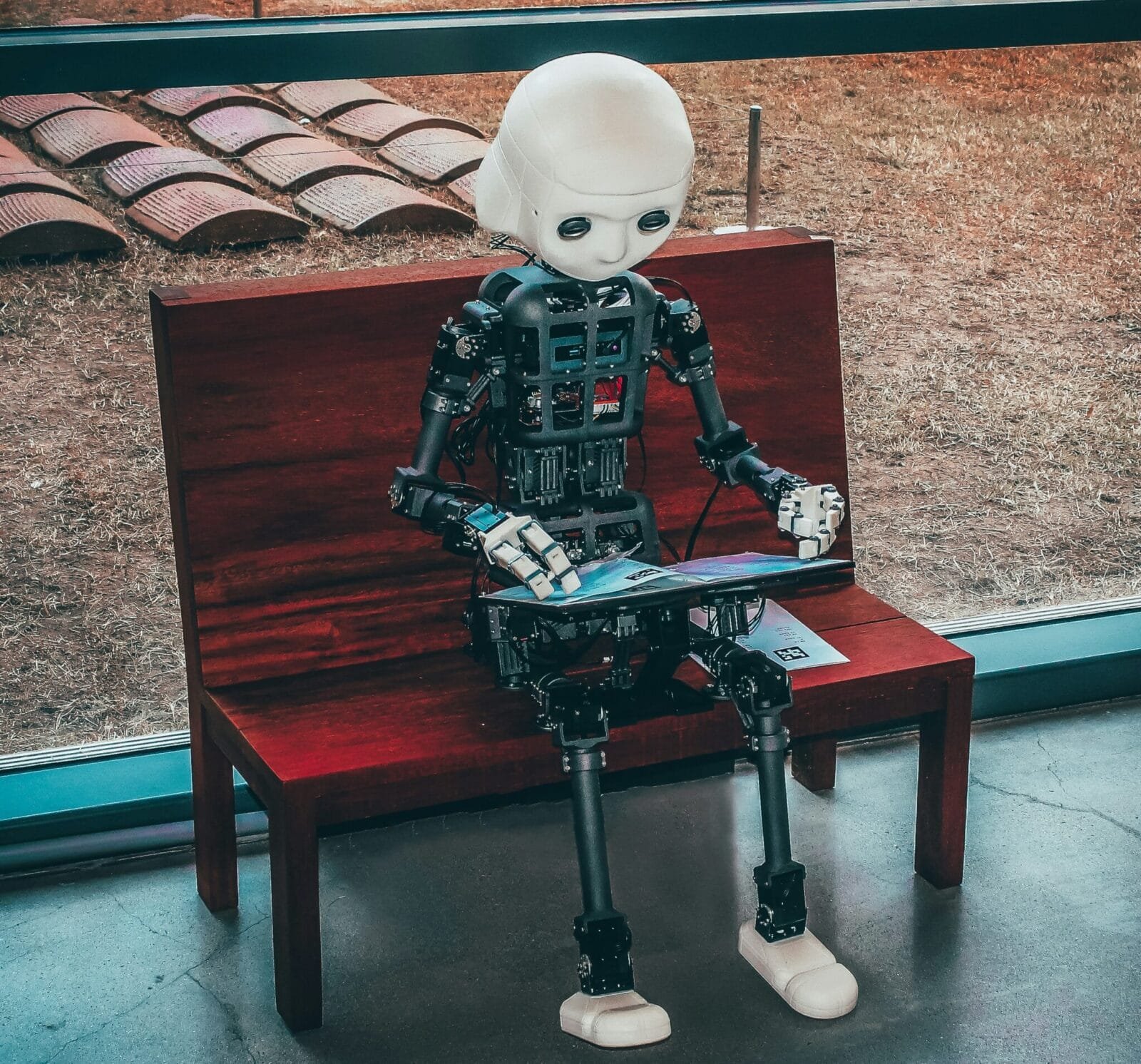

Autonomous AI Systems

As AI systems become more autonomous, security challenges will increase. Organizations must prepare for scenarios where AI systems make security-relevant decisions without human oversight.

Regulatory Evolution

The regulatory landscape for AI will continue to evolve, requiring organizations to maintain flexibility in their security approaches and compliance strategies.

Final Thought

Enterprise AI security risks represent a fundamental challenge that requires comprehensive, proactive approaches. Organizations that successfully navigate these risks will gain significant competitive advantages while protecting their stakeholders and maintaining regulatory compliance. The key to success lies in understanding the unique characteristics of AI security risks, implementing appropriate controls and governance frameworks, and maintaining continuous vigilance as the threat landscape evolves.

The investment in AI security is not merely a defensive measure but a strategic imperative that enables organizations to realize the full potential of AI while maintaining the trust and confidence of their stakeholders. As AI continues to transform business operations, those organizations that prioritize security will be best positioned to thrive in an AI-driven future.

Recommendations for Action

Organizations should begin by conducting comprehensive AI security risk assessments, developing appropriate governance frameworks, and investing in the necessary tools and expertise to manage these risks effectively. The time to act is now, as the cost of addressing AI security risks proactively is significantly lower than the potential impact of security incidents.

By taking a strategic approach to AI security, organizations can unlock the transformative potential of artificial intelligence while maintaining the security and trust that are essential for long-term success.
